As the end of my studies is nigh, I’m looking into possible topics for my master’s thesis. Alas, I’ve grown a bit tired of writing game engines and renderers lately (who’d have thought).

Luckily, this quest for new ~~shores~~ topics was solved quickly. I’ve also developed quite an interest in procedural generation and have always been a bit fascinated by fantasy worlds and maps. So, I’ve decided to work on procedural world generation.

This is obviously going to be a fun project with much potential for interesting failures.[1] Therefore, I have the best intentions of documenting my plans, progress and interesting problems here. By “best intentions” I of course mean “force myself”, and by “documenting” I mean “dump my thoughts here and try to make sense of it all”.

Without further ado: welcome to this unwise cocktail of a forever project and a master’s thesis.

Related Work

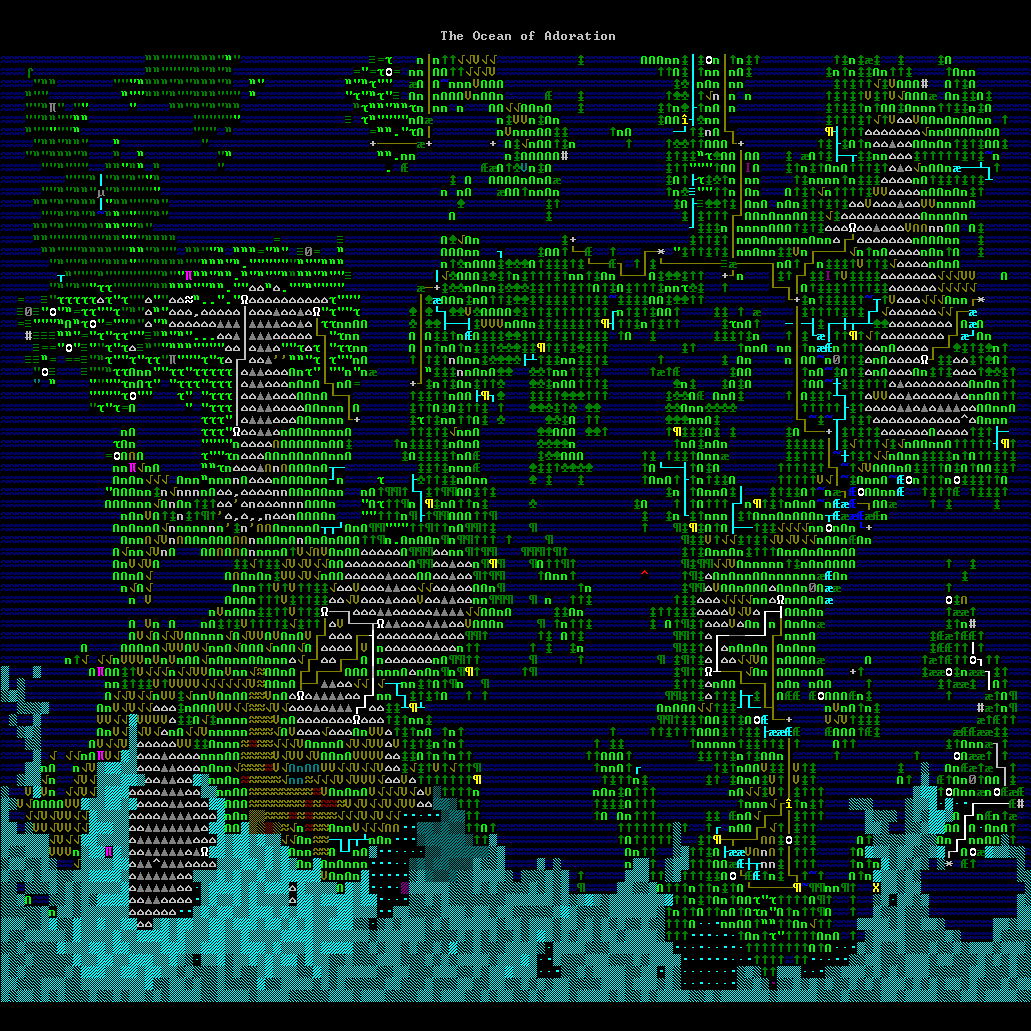

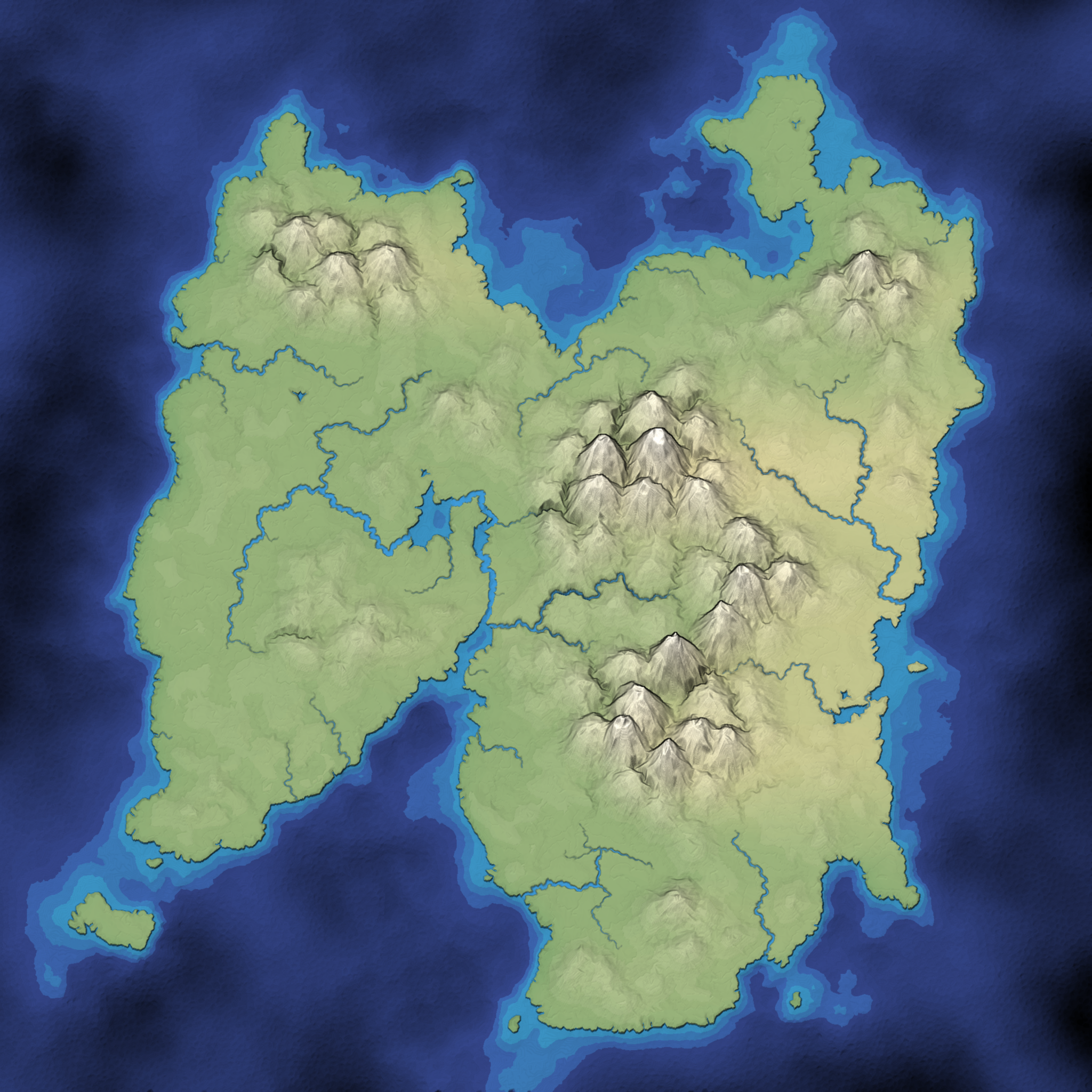

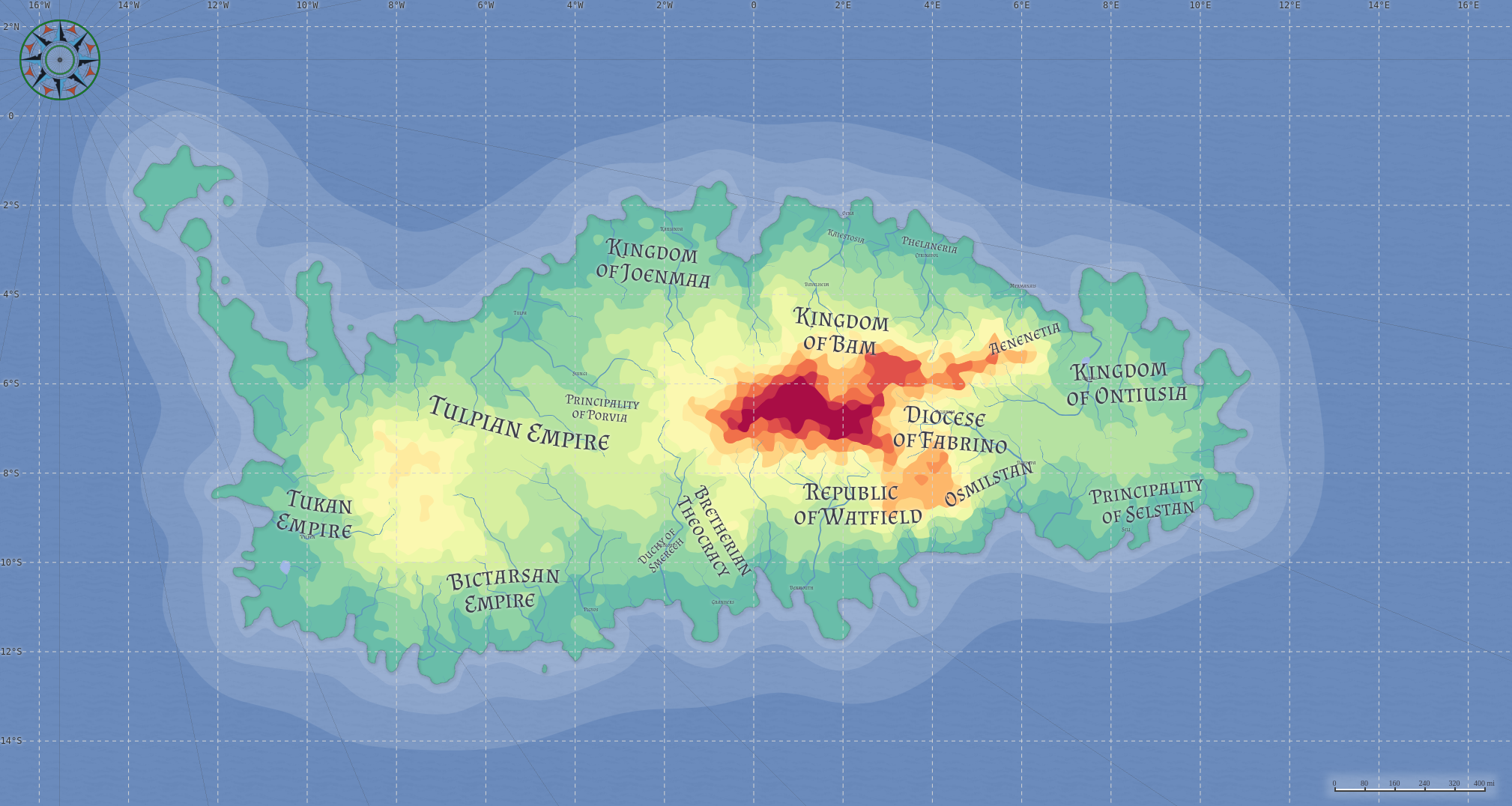

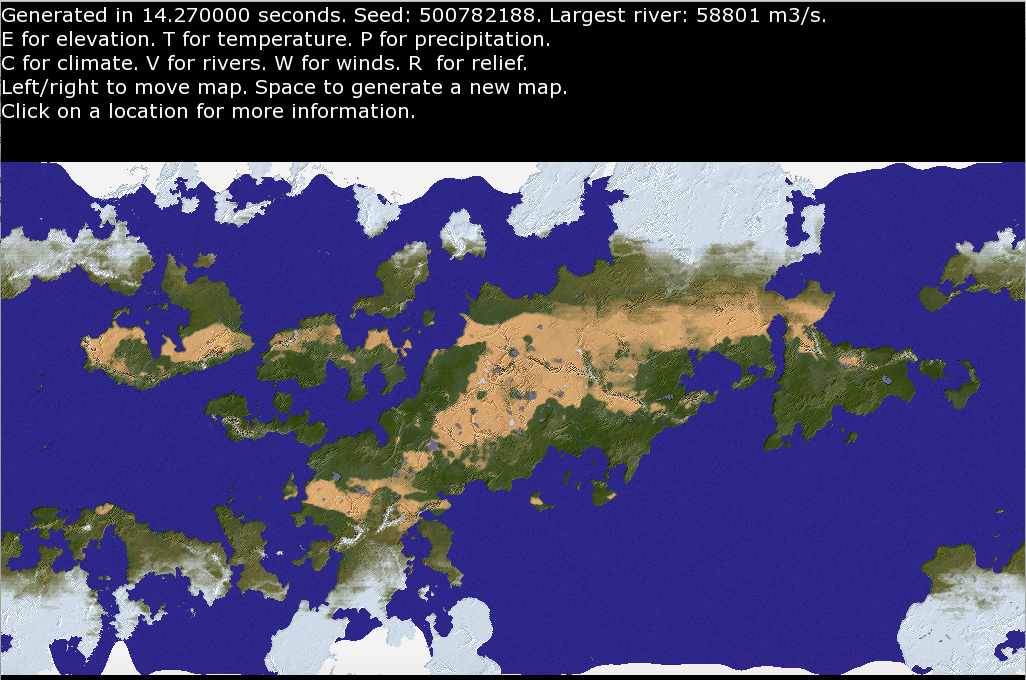

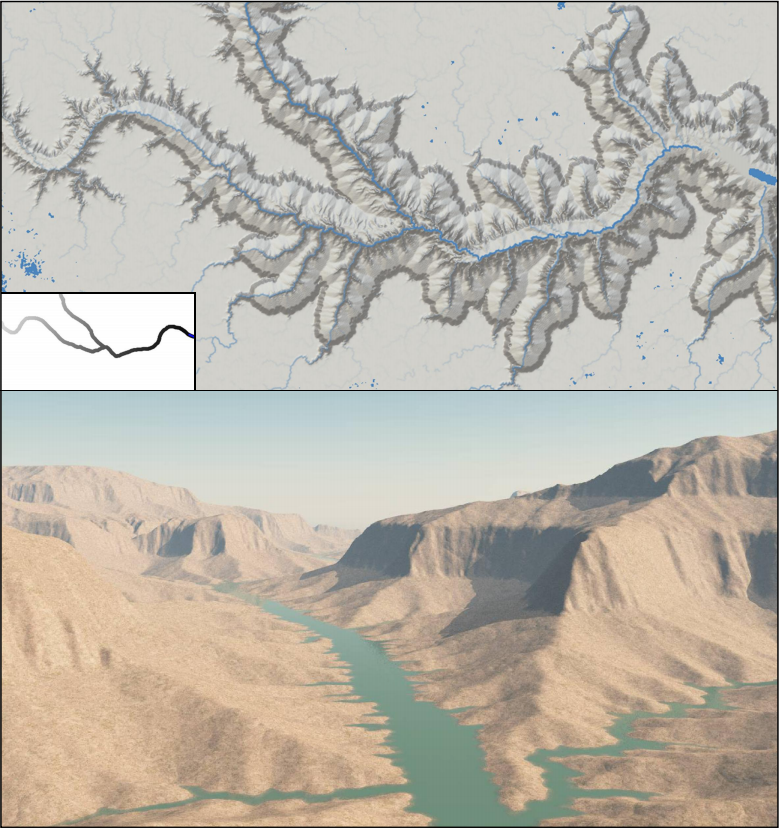

There are already quite a few inspiring projects that procedurally generate worlds and maps with impressive results, some of which I will briefly mention here. I’ve also uncovered some scientific papers that I might use as a basis for my project. Unfortunately, though, most of these projects are closed source.

Project Aims

The attentive reader might wonder: what exactly is it that I’m trying to achieve here?

The main focus of my project is pretty similar to that of Undiscovered Worlds: I’m interested in generating plausible worlds that can be explored, used and processed further by others. Of course, I’ll also have to visualize my results in the form of maps and provide some form of user input, but that probably won’t be anything too fancy.

Apart from that, I have three main aims for this project guiding my design decisions.

Simulation Instead of Noise

As I’m mostly interested in the world generation aspect, I want to reduce random input and noise to a minimum and rely on simulations instead. A model based on large amounts of intricately layered noise might generate interesting worlds, but that is entirely thanks to how the noise is modulated rather than to any meaningful capability of the system. As a consequence, the space of worlds such a model can generate is inherently limited to the small subset for which its parameters have been hand-tuned. As John von Neumann famously said:

With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.

My goal is to create a more generalized system that can generate a wide variety of interesting worlds.

“Interesting” is, of course, subjective and means many different things to different people. Personally, I think one intriguing way to measure “interesting” in this context is the entropy of the generated world, i.e. its information density, uncertainty or “surprisingness”[2]. On one end of the spectrum, with an entropy near zero, we have an entirely flat, featureless plane. Even if beige is your favorite color and your favorite ice cream flavor is “cold”, we can probably agree that this world wouldn’t be terribly “interesting”. On the other end, with a large entropy, we end up with a comprehensively incomprehensible world, where everything appears to be random noise because nothing is correlated.

Noise-based terrain, while often beautiful, would probably lie mostly toward the low-entropy end (think rolling hills, uniform mountains and general predictability), while our real world would lie more toward the high end. The sweet spot I’m aiming for lies somewhere between the two: less predictable than Perlin noise, but also not as opaque and complex as the real world.
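
A crude way to make this concrete, purely as an illustration and not a measure I’ve settled on, is the Shannon entropy of the distribution of elevation values. The sketch below assumes equal-width binning, and the name `histogram_entropy` is made up. It returns 0 for a perfectly flat plane and approaches log2(bins) as the values spread out evenly. Note that it only looks at *which* values occur, not *where*: shuffling the samples leaves the result unchanged, so on its own it cannot tell correlated terrain from pure noise.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// First-order Shannon entropy (in bits) of a set of samples, e.g. elevations.
// Samples are quantized into `bins` equal-width buckets between their minimum
// and maximum; the result is -sum(p * log2(p)) over the non-empty buckets.
// It ranges from 0 (all samples equal) to log2(bins) (perfectly even spread).
double histogram_entropy(const std::vector<double>& samples, std::size_t bins) {
    assert(bins > 0);
    if (samples.empty()) return 0.0;

    const auto [min_it, max_it] = std::minmax_element(samples.begin(), samples.end());
    const double lo = *min_it;
    const double hi = *max_it;
    if (hi == lo) return 0.0;  // a perfectly flat plane: zero surprise

    std::vector<std::size_t> counts(bins, 0);
    for (const double s : samples) {
        auto bucket = static_cast<std::size_t>((s - lo) / (hi - lo) * static_cast<double>(bins));
        if (bucket >= bins) bucket = bins - 1;  // the maximum lands in the last bucket
        ++counts[bucket];
    }

    double entropy = 0.0;
    for (const std::size_t c : counts) {
        if (c == 0) continue;
        const double p = static_cast<double>(c) / static_cast<double>(samples.size());
        entropy -= p * std::log2(p);
    }
    return entropy;
}
```

Feeding it the differences between neighboring elevations instead of the raw values would be one way to account for local correlation: a perfectly smooth ramp then scores 0, while white noise still scores high.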

In other words, what I want to achieve here is a comprehensible amount of meaningful information in the generated worlds. That is, every visible feature should have a cause that the user can discover, e.g. matching coastlines where continents split apart, canyons where rivers used to flow, and settlements where they actually make sense, with names based on their surroundings and history. Aside from the general technical difficulty, striking the right balance will also be a problem here: an unbroken chain of cause and effect is utterly meaningless if it’s too complex to comprehend, because the generated world then becomes no more interesting than one based purely on random noise.

Realistic Scale

My second central aim for this project is the scale at which I want to generate worlds. To support physically meaningful parameters and allow for an easier comparison with the real world, I’m planning to generate spherical worlds on roughly the same scale as Earth, i.e. with a radius of about 6,000 km.

To support such a large scale with any amount of local detail, I’m going to use a triangle mesh to store the elevation and other information. This should allow the resolution to vary with local requirements (e.g., fewer vertices in the ocean and more near coasts and mountains). It should also avoid most of the problems and artifacts usually encountered when mapping uniform rectangular grids, like bitmaps, onto a spherical surface.
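
To put a rough number on those grid artifacts, here is a small worked example. It assumes a plain equirectangular (latitude/longitude) bitmap and the planet radius from above; the function name is made up for illustration.

```cpp
#include <cassert>
#include <cmath>

// East-west width (in km) of one cell of an equirectangular latitude/longitude
// grid. Every row has the same number of cells, but the circle of latitude they
// share shrinks with cos(latitude), so cells get squeezed toward the poles while
// their north-south height stays radius * cell size (in radians) everywhere.
double equirect_cell_width_km(double latitude_deg, double cell_size_deg, double radius_km) {
    assert(cell_size_deg > 0.0 && radius_km > 0.0);
    const double deg_to_rad = std::acos(-1.0) / 180.0;  // pi / 180
    return radius_km * std::cos(latitude_deg * deg_to_rad) * cell_size_deg * deg_to_rad;
}
```

With a radius of 6,000 km, a 1° cell is about 104.7 km wide at the equator but only about 18.2 km wide at 80° latitude, while staying about 104.7 km tall everywhere. A triangle mesh has no such built-in dependence on latitude; its vertices can be placed wherever detail is actually needed.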

I’m currently planning to develop my generator in a top-down fashion: starting with the largest terrain features (like tectonic plates and mountains) and moving on to smaller-scale details like rivers, caves and settlements from there. Although the dynamic resolution of a triangle mesh should lend itself well to such an approach, I might eventually need to split the system into multiple generators for different scales (e.g., erosion at the scale of a continent or mountain range vs. the scale of a local river). However, that should also mesh quite well with the triangle mesh approach, as the small-scale generator could take the vertices from the high-level generator, refine them further and generate new information that matches the constraints set by the existing vertices. Of course, there will also be many interesting problems there, like the usual discontinuities at the borders between cells. But that is far enough in the future that I probably shouldn’t concern myself with it just yet. That is clearly a problem for future me, who is much more experienced anyway.
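
The refinement idea can be sketched with the simplest scheme I know of: split each triangle into four at its edge midpoints and project the new vertices back onto the sphere. Everything here (the `SphereMesh` layout, `refine`, linear elevation interpolation) is an assumption for illustration. A real small-scale pass would refine adaptively, only where detail is needed, and then generate new information at the inserted vertices rather than just interpolating.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

struct Vec3 { double x, y, z; };

// Projects a point back onto the unit sphere.
Vec3 normalized(Vec3 v) {
    const double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0);
    return {v.x / len, v.y / len, v.z / len};
}

using Triangle = std::array<std::uint32_t, 3>;

// Hypothetical minimal world mesh: unit-sphere positions, one elevation per
// vertex and counter-clockwise index triples.
struct SphereMesh {
    std::vector<Vec3> positions;
    std::vector<double> elevation_m;
    std::vector<Triangle> triangles;
};

// Splits every triangle into four. Coarse vertices keep their indices and
// values, and each shared edge gets exactly one midpoint (via the cache), so
// the refined mesh stays watertight. New elevations are interpolated from the
// coarse vertices as a starting point for a finer generator to add detail to.
SphereMesh refine(const SphereMesh& coarse) {
    SphereMesh fine;
    fine.positions = coarse.positions;
    fine.elevation_m = coarse.elevation_m;
    std::map<std::pair<std::uint32_t, std::uint32_t>, std::uint32_t> midpoint_of_edge;

    auto midpoint = [&](std::uint32_t a, std::uint32_t b) -> std::uint32_t {
        const std::pair<std::uint32_t, std::uint32_t> edge{std::min(a, b), std::max(a, b)};
        if (const auto it = midpoint_of_edge.find(edge); it != midpoint_of_edge.end()) {
            return it->second;
        }
        const Vec3 pa = fine.positions[a];
        const Vec3 pb = fine.positions[b];
        const auto index = static_cast<std::uint32_t>(fine.positions.size());
        fine.positions.push_back(normalized({pa.x + pb.x, pa.y + pb.y, pa.z + pb.z}));
        fine.elevation_m.push_back(0.5 * (fine.elevation_m[a] + fine.elevation_m[b]));
        midpoint_of_edge.emplace(edge, index);
        return index;
    };

    for (const Triangle& t : coarse.triangles) {
        const std::uint32_t ab = midpoint(t[0], t[1]);
        const std::uint32_t bc = midpoint(t[1], t[2]);
        const std::uint32_t ca = midpoint(t[2], t[0]);
        fine.triangles.push_back({t[0], ab, ca});
        fine.triangles.push_back({t[1], bc, ab});
        fine.triangles.push_back({t[2], ca, bc});
        fine.triangles.push_back({ab, bc, ca});
    }
    return fine;
}
```

Because the coarse vertices keep their indices, anything computed at the coarse level can still be looked up after refinement, which is what lets them act as constraints for the finer pass.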

Reusability

My final aim for this project stems from an inkling that I might have bitten off more than I can chew here. Because of that, I want to build the project so that, even in an incomplete state, parts of it are still useful or at least interesting to others. To achieve this, I’ve not only open-sourced (most of) the project but also plan to structure it as modularly as possible.

I’ve already laid the foundation for this with a basic C-API for the world model and generation passes. While I’ll initially only work on a C++ wrapper for use in my own code, the C-API core should also allow for future interoperability with other languages like Python or C#.
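
To illustrate the general pattern (this is not the project’s actual API; every `wg_*` name below is hypothetical), a C-API usually boils down to an opaque handle plus plain functions, with the C++ wrapper adding RAII and exceptions on top.

```cpp
#include <cstddef>
#include <memory>
#include <new>
#include <stdexcept>
#include <vector>

// ---- C interface (would live in a C header) ----
// An opaque handle plus free functions: only C types cross the boundary, so
// other languages can bind to the same exported symbols.
extern "C" {
typedef struct wg_world wg_world;
wg_world* wg_world_create(void);
void wg_world_destroy(wg_world* world);
long wg_world_add_vertex(wg_world* world, double elevation_m);  // new index, or -1 on failure
long wg_world_vertex_count(const wg_world* world);
}

// ---- Implementation behind the boundary (free to use C++ internally) ----
struct wg_world {
    std::vector<double> elevation_m;
};

extern "C" wg_world* wg_world_create(void) { return new (std::nothrow) wg_world{}; }

extern "C" void wg_world_destroy(wg_world* world) { delete world; }

extern "C" long wg_world_add_vertex(wg_world* world, double elevation_m) {
    if (world == nullptr) return -1;
    try {
        world->elevation_m.push_back(elevation_m);
    } catch (...) {
        return -1;  // C++ exceptions must never propagate through a C interface
    }
    return static_cast<long>(world->elevation_m.size()) - 1;
}

extern "C" long wg_world_vertex_count(const wg_world* world) {
    return world == nullptr ? 0 : static_cast<long>(world->elevation_m.size());
}

// ---- C++ convenience wrapper (RAII + exceptions) ----
namespace wg {
class World {
public:
    World() : handle_(wg_world_create()) {
        if (!handle_) throw std::bad_alloc();
    }
    std::size_t add_vertex(double elevation_m) {
        const long index = wg_world_add_vertex(handle_.get(), elevation_m);
        if (index < 0) throw std::runtime_error("wg_world_add_vertex failed");
        return static_cast<std::size_t>(index);
    }
    std::size_t vertex_count() const {
        return static_cast<std::size_t>(wg_world_vertex_count(handle_.get()));
    }

private:
    struct Deleter {
        void operator()(wg_world* world) const noexcept { wg_world_destroy(world); }
    };
    std::unique_ptr<wg_world, Deleter> handle_;  // freed automatically; wrapper is move-only
};
}  // namespace wg
```

Since only opaque pointers, `double` and `long` cross the boundary, Python could load the same symbols via `ctypes` and C# via P/Invoke, without knowing anything about the C++ internals.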

Based on this, I plan to develop a simple graphical editor as a debugging tool and to build all the actual procedural generation passes as self-contained, reusable modules that can be loaded at runtime as plugins (.dll/.so). So, others should (at least in theory) be able to modify (or salvage) any interesting parts, extend the system with new functionality or use it as a starting point for their own projects.
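
Loading such a pass at runtime could look roughly like this on POSIX systems. The descriptor layout, the exported symbol name `wg_get_pass` and the version check are assumptions for illustration, not the project’s actual plugin interface; on Windows, `LoadLibrary`/`GetProcAddress` would take the place of `dlopen`/`dlsym`.

```cpp
#include <cstdint>
#include <dlfcn.h>  // POSIX dlopen/dlsym/dlclose/dlerror
#include <memory>
#include <string>

// Hypothetical plugin ABI: each generation pass is a shared library exporting
// one C function, `wg_get_pass`, that returns a pointer to a static descriptor.
extern "C" {
struct wg_pass_descriptor {
    std::uint32_t api_version;  // must match the host's version to be accepted
    const char* name;           // human-readable pass name
    int (*run)(void* world);    // runs the pass; returns 0 on success
};
typedef const wg_pass_descriptor* (*wg_get_pass_fn)(void);
}

constexpr std::uint32_t kHostApiVersion = 1;

struct LibraryCloser {
    void operator()(void* library) const noexcept { dlclose(library); }
};

struct LoadedPass {
    std::unique_ptr<void, LibraryCloser> library;  // unloaded when this goes out of scope
    const wg_pass_descriptor* descriptor = nullptr;  // points into the loaded library
    std::string error;                               // empty on success
};

LoadedPass load_pass(const std::string& path) {
    LoadedPass pass;
    pass.library.reset(dlopen(path.c_str(), RTLD_NOW | RTLD_LOCAL));
    if (!pass.library) {
        const char* message = dlerror();
        pass.error = message ? message : "dlopen failed";
        return pass;
    }
    void* symbol = dlsym(pass.library.get(), "wg_get_pass");
    if (symbol == nullptr) {
        pass.error = "plugin does not export wg_get_pass";
        pass.library.reset();
        return pass;
    }
    // Casting void* to a function pointer is only conditionally supported in
    // ISO C++, but it is how dlsym results are used on POSIX platforms.
    const auto get_pass = reinterpret_cast<wg_get_pass_fn>(symbol);
    const wg_pass_descriptor* descriptor = get_pass();
    if (descriptor == nullptr || descriptor->api_version != kHostApiVersion) {
        pass.error = "incompatible plugin API version";
        pass.library.reset();
        return pass;
    }
    pass.descriptor = descriptor;
    return pass;
}
```

A plugin would then be a separately compiled shared library that defines `extern "C" const wg_pass_descriptor* wg_get_pass(void)`. Checking an explicit version number before calling anything else is a cheap guard against loading a pass built against an older API. With glibc versions before 2.34, the host additionally has to be linked with `-ldl`.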

Outlook

With the goal in mind and the course set: what’s next?

While I would really like to dive directly into exploring ideas for procgen algorithms and interesting simulation approaches, I think I should first document and discuss some of the groundwork. To do so, the next couple of posts will mostly focus on how the world is modeled, stored, and modified in the code[3].

With that down, there are some (hopefully lighter) topics I would like to explore:

- Simulating plate tectonics to generate realistic high-level features (probably based on Procedural Tectonic Planets by Cortial et al.)

- Extending and refining the current API

- Creating a renderer to display the generated information in a compact way that is easy to decipher

- Creating easy-to-use tools to modify the generated worlds, both for debugging and for artistic input

- Simulating large-scale weather patterns to determine precipitation, average temperatures, and biomes

- Modeling drainage basins and high-level water flow to generate river systems

- Generating detail maps for smaller areas, based on the high-level features from the coarser large-scale map

- Simulating coarse erosion based on this (maybe similar to Large Scale Terrain Generation from Tectonic Uplift and Fluvial Erosion by Cordonnier et al.)

- Simulating large-scale changes in world climate (e.g., ice ages and glaciation) to reproduce some of the more interesting terrain features we have on Earth: fjords, sunken landmasses, interesting features on continental shelves, …

- …